Topic 2 Regression Assumptions

2.1 Discussion

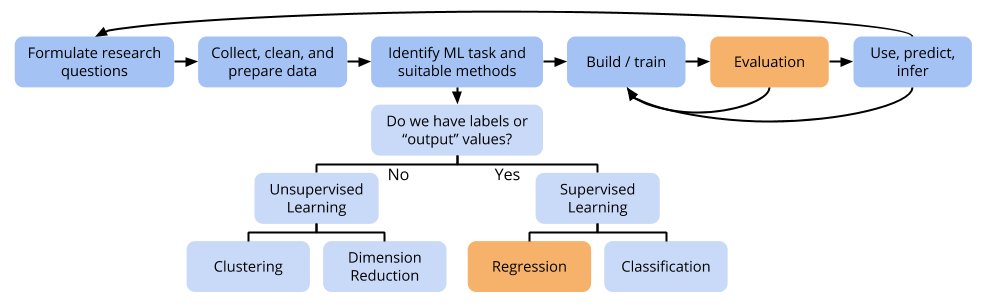

Regression tasks

In regression tasks, our goal is to build a model (a function) \(f\) that predicts the quantitative response values well:

\[y = f(x) + \varepsilon\]

- \(y\): quantitative response variable

- \(x = (x_1, x_2, \ldots, x_p)\): are \(p\) explanatory variables, predictors, features

- \(f(x)\) is a function that captures the underlying trend/relationship between the response and the predictors

- \(\varepsilon\) is random unexplainable error/noise

The linear regression model you learned about in MATH 155 is a special case of \(f\):

\[ \begin{align*} y &= f(x) + \varepsilon \\ &= \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_p x_p + \varepsilon \end{align*} \]

Evaluating regression models

- Should meet assumptions required for statistical inference

- Should explain a substantial proportion of the variation in the response

- Should produce accurate predictions

Linear regression assumptions

\[\varepsilon \stackrel{ind}{\sim} N(0, \sigma^2)\]

Assumptions are to ensure that statistical inference procedures (p-values, confidence intervals) “work as advertised”:

- The process of creating a 95% CI is a procedure (add and subtract about 2 standard errors from the estimate).

- It “works as advertised” if in 95% of possible samples it creates intervals that contain the true population value. (The other 5% of samples are unlucky ones.)

- It does not “work as advertised” if only 90% of possible samples result in intervals (95% CIs) that contain the true population value.

2.2 Exercises

You can download a template RMarkdown file to start from here.

We will continue looking at the New York housing data. Each case (row) in the dataset is a house in New York, and on each house, we have information on several variables. We’ll focus on the response variable Price (in dollars) and a single predictor variable Age (in years).

# Load required packages

library(ggplot2)

library(dplyr)

# Read in the data

homes <- read.delim("http://sites.williams.edu/rdeveaux/files/2014/09/Saratoga.txt")

# Look at the first 6 rows

head(homes)## Price Lot.Size Waterfront Age Land.Value New.Construct Central.Air

## 1 132500 0.09 0 42 50000 0 0

## 2 181115 0.92 0 0 22300 0 0

## 3 109000 0.19 0 133 7300 0 0

## 4 155000 0.41 0 13 18700 0 0

## 5 86060 0.11 0 0 15000 1 1

## 6 120000 0.68 0 31 14000 0 0

## Fuel.Type Heat.Type Sewer.Type Living.Area Pct.College Bedrooms

## 1 3 4 2 906 35 2

## 2 2 3 2 1953 51 3

## 3 2 3 3 1944 51 4

## 4 2 2 2 1944 51 3

## 5 2 2 3 840 51 2

## 6 2 2 2 1152 22 4

## Fireplaces Bathrooms Rooms

## 1 1 1.0 5

## 2 0 2.5 6

## 3 1 1.0 8

## 4 1 1.5 5

## 5 0 1.0 3

## 6 1 1.0 8We will pretend that the homes dataset contains the full population of New York houses. Let’s draw a random sample of 500 houses from the “population”. We can do this with the sample_n() function available in the dplyr package:

# Randomly sample 500 homes

homes_sample <- sample_n(homes, size = 500)- Checking the independence assumption

In thinking about what the cases are, do you think the independence assumption holds?

- A first try at a model

Visualize the relationship between house price and house age with a scatterplot and smooth trend line. How would you describe the overall shape of the trend? Is it linear?

ggplot(homes_sample, aes(x = ???, y = ???)) + geom_???() + geom_smooth()Using our sample (

homes_sample), fit a linear regression model wherePriceis predicted byAge:# Fit the model mod1 <- lm(Price ~ Age, data = homes_sample) # Display the summary table summary(mod1)Check the trend and homoskedasticity assumptions by plotting the residuals versus the fitted (predicted) values. The points should be evenly scattered around the y = 0 line. Do you think these assumptions are met?

# Put the residuals and predicted values into a dataset mod1_output <- data.frame( residual = residuals(mod1), predicted = fitted.values(mod1) ) # Plot ggplot(mod1_output, aes(x = ???, y = ???)) + geom_point() + geom_smooth(color = "blue", lwd = 3) + # Add a smooth trend geom_hline(yintercept = 0, color = "red") # Add the y = 0 lineCheck the normality assumption by making a QQ-plot of the residuals. In a QQ-plot, each residual (y-axis) is plotted against its theoretical corresponding value from a standard normal distribution (\(N(0,1^2)\)) on the x-axis. That is, the first quartile of the residuals is plotted against the first quartile of \(N(0,1^2)\), the median of the residuals is plotted against the median of \(N(0,1^2)\), and so on. If the residuals follow a normal distribution, then the points should fall on a line. Do you think the normality assumption holds?

ggplot(mod1_output, aes(sample = residual)) + geom_qq() + geom_qq_line()

- A second model

The diagnostic plots we made above suggest that key assumptions are not being met. Let’s explore how transforming variables can help us meet those assumptions.One of the most common variable transformations that can help fix an unmet homoskedasticity assumption is a logarithmic transformation of the response variable. We will also try to better model the nonlinear shape of the

Pricevs.Agetrend by using a logarithmic transformation. Themutate()function in thedplyrpackage allows us to create these new transformed variables:# log() = natural logarithm # The %>% is a "pipe": it takes the dataset to the left and provides it as input to the code that follows homes_sample <- homes_sample %>% mutate( log_price = log(Price), log_age = log(Age + 1) # Some Age's are 0, so add 1 to prevent log(0), which is undefined )Fit a linear regression model where

log_priceis predicted bylog_ageand obtain the residuals and fitted values:mod2 <- lm(???, data = homes_sample) mod2_output <- data.frame( residual = residuals(???), predicted = fitted.values(???) )Check the trend, homoskedasticity, and normality assumptions for

mod2. Do these assumptions seem to hold better formod1ormod2?

- Implications for statistical inference

Since we have the entire population of New York homes, we can investigate whether or not confidence intervals “work as advertised” for the two models we investigated.Display the 95% confidence intervals for the coefficients in

mod1andmod2with theconfint()function:By fitting theconfint(mod1) confint(mod2)Price ~ Agemodel in the full population, we know that the true population value of theAgecoefficient is -636.2551305.

And by fitting thelog_price ~ log_agemodel in the full population, we know that the true population value of thelog_agecoefficient is -0.134966.

Does the 95% confidence interval “work as advertised” in this case?But what we did in part a just looked at one sample. If the 95% CI truly were working as advertised, 95% of samples would create 95% CIs that contain the true population value. We can run some simulations and see how 95% CIs “work” in 1000 different samples.

We find that formod1, 95% CIs contain the true value of theAgecoefficient 899 times.

We find that formod2, 95% CIs contain the true value of thelog_agecoefficient 964 times.

With regards to statistical inference, what can you conclude about assumption violations and fixing those violations?